Cursor without rules

Yes, you can use Cursor without rules

Is Cursor useful without rules?

One of the questions to which I wanted to find an answer was whether simple prose rules are enough for getting good results out of Cursor.

The promise of simple prose rules is that:

they are portable and not tied to a specific tool,

they are written in an understandable format and can be edited by hand

To answer this question I set out ot write a small project from scratch – or rather, have Cursor write it for me.

The rules of the game

I really only set two rules for myself:

No artisanal code – I’m not allowed to commit any code written by hand – all committed code needs to be written by the agent

No Cursor rules – Recurring mistakes need to be encoded in rules written in docs/tech.md

This way I hoped the experiment would give me a better understanding of the limits of LLMs and coding agents.

The result

First of all – I got working software!

It meets the criteria set out in the initial specification.

I’ve delayed writing a project like this multiple times, because “having to figure out OAuth with Gmail” was enough of a hurdle for me to never overcome.

Cursor mostly stuck to my rules, despite them just being in a Markdown file.

The one it broke consistently: “no mocking” – it ended up using Python’s MagicMock in a bunch of situations to stub out interactions with Gmail.

The process

Writing the software felt like turning a crank:

Open plan, copy LLM-generated LLM prompt,

Set up context for Cursor in Agent mode,

Submit

Do something else

Check in later – the agent reached a stable state, ask it to commit the changes

Occasionally I’d run the tool to verify everything works in a non-test environment, run into issues, and have Cursor fix them.

Tweaks discovered along the way

Bake rules into the prompt

Compare these two prompts:

Review the Gmail CLI codebase for quality and consistency. Run 'uv run pyright' for type checking, 'uv run ruff check --fix' for linting, and 'uv run ruff format' for code formatting. Check that: 1) Type hints are used everywhere with no Any types, 2) Error handling uses custom exception hierarchies defined in errors.py, 3) Code follows dependency injection over global state, 4) All business logic has unit tests, 5) All I/O operations use async/await. Fix any issues found to meet these technical guidelines.

And:

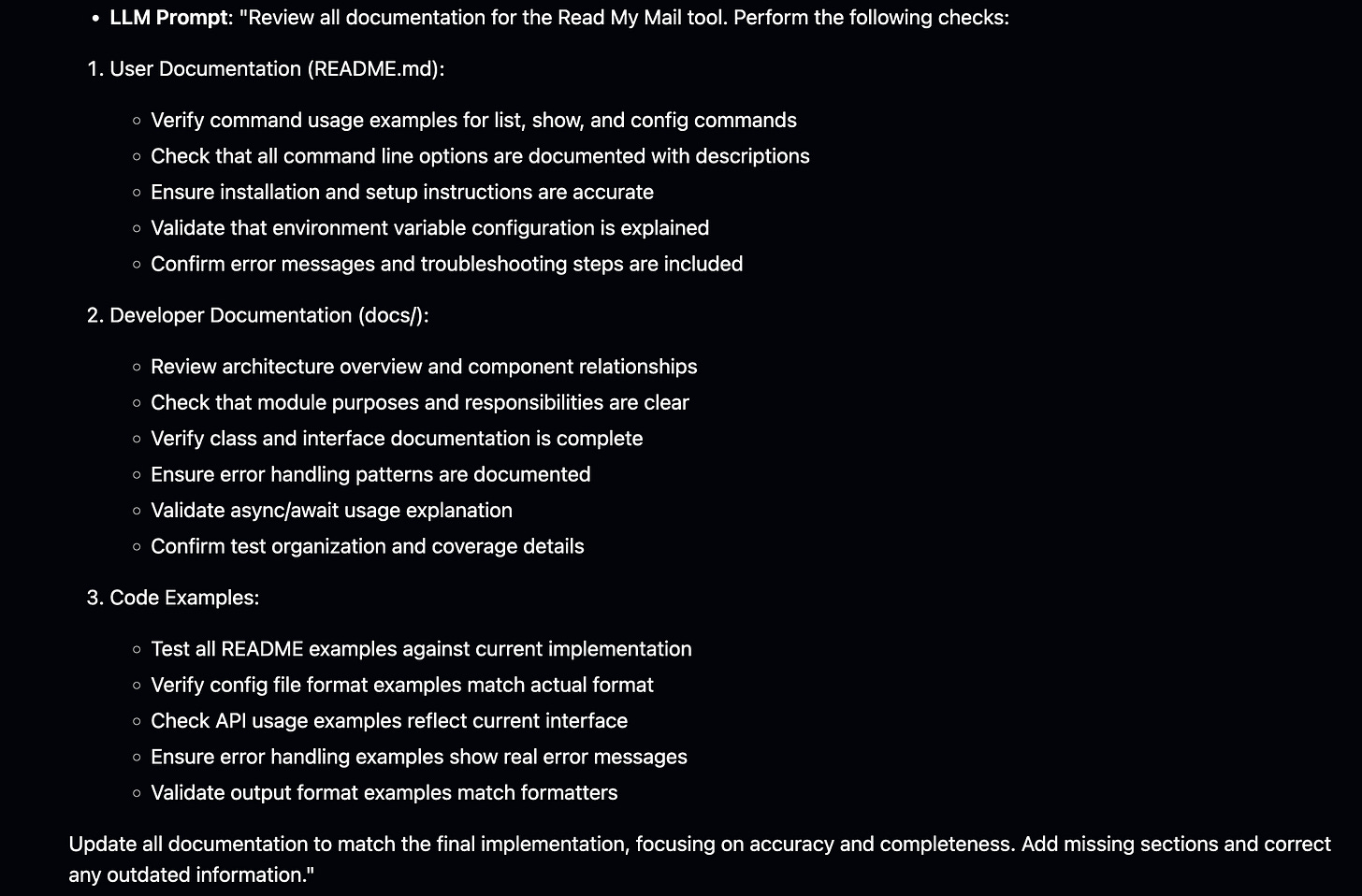

The first one is generic and short, the second one very detailed.

When I asked the LLM for a specific plan, I also asked it to include prompts like the first one.

Usually I’d go ahead and just paste them directly, and it worked out well enough.

I’ve noticed that these prompts actually reflect the guidelines set out in the tech document nicely.

Towards the end, I wanted to get more out of this, so I just selected a prompt in the plan document, and asked Cursor to make it more detailed based on the development guidelines.

The longer prompt worked better, because it was more specific.

In hindsight I should have done this for every prompt.

Save your prompts

I would find myself asking essentially the same question multiple times, and after doing this a couple of times, I started to commit prompts to the repository.

These prompts correspond to tasks I need to perform regularly:

fix newly introduced test failures,

make sure user-facing documentation actually reflects the code,

test adherence to coding standards.

Keeping these prompts close by in the repository made it easy to perform these tasks more frequently and especially when fixing test failures, the model would sometimes prefer mypy over pyright for type-checking.

Since the “fix the tests” prompt explicitly mentions which tools to use, the LLM doesn’t pick the wrong tool anymore.

Next steps

This was a small project, using essentially too few rules.

Going forward, I want to introduce more rules (~50 to 100 would be good) and see how it affects performance of the LLM – will it make fewer mistakes?

Until then, have fun reading the code.