Grow your own agent

...build on what Thorsten encouraged you to do

If you haven’t done so already, take 60 minutes to type out Thorsten’s wildly popular guide on building a minimal agent.

It really doesn’t take long.

This post is not about agents being essentially simple while loops.

No, this is about what happens after.

Because with the simple toolbox Thorsten gives you, you now have a self-modifying agent.

Let me repeat that: with three tools, the agent can modify itself.

You can grow the agent into one you like to use.

Unlike Cursor, it can be yours.

You can experiment freely.

And most importantly, you understand, on a deep level, what’s actually going on under the hood.

My goal with this project was to see how far I could push the agent to develop itself into a more and more capable version of itself.

But first things first: if you want to play or read along, the code is here:

https://github.com/dhamidi/smolcode

Mind you, this is a messy construction zone, not a pristine temple of software engineering best practices.

⚠️ Warning: the following is raw, messy, unedited and unscripted.

Watch smolcode clean up messy workspace files and highlight a bug in my implementation of prompt caching:

The point of this is not to impress you, but to get you to build your own.

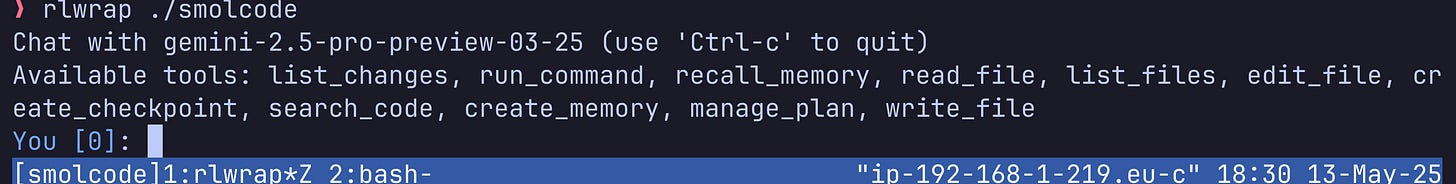

Building your own lets you play around with all kinds of things. Just look at all these tools:

On top of the original `read_file`, `list_files`, and `edit_file`, we have:

- `create_checkpoint` for writing detailed commit messages based on the changed files reported by `list_changes`
- `create_memory` and `recall_memory` to remember things about the project (e.g. the build command)
- `manage_plan` for making plans, reordering tasks, etc. without needing to mess around with markdown files
- and finally `write_file`, because sometimes Gemini fails hard at replacing strings in files.
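To give a feel for how small such a tool can be, here is a hypothetical in-process store behind `create_memory` and `recall_memory`. smolcode's actual implementation may persist and search memories quite differently. The type and method names below are made up, and recall is a plain substring match on the key.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// memoryStore is a hypothetical backing store for create_memory/recall_memory.
// It keeps everything in a map, so memories vanish when the process exits.
type memoryStore struct {
	entries map[string]string
}

func newMemoryStore() *memoryStore {
	return &memoryStore{entries: make(map[string]string)}
}

// createMemory stores (or overwrites) a fact under a key.
func (m *memoryStore) createMemory(key, value string) {
	m.entries[key] = value
}

// recallMemory returns every "key: value" pair whose key contains query,
// sorted so the result is deterministic despite random map iteration order.
func (m *memoryStore) recallMemory(query string) []string {
	var hits []string
	for k, v := range m.entries {
		if strings.Contains(k, query) {
			hits = append(hits, k+": "+v)
		}
	}
	sort.Strings(hits)
	return hits
}

func main() {
	mem := newMemoryStore()
	mem.createMemory("build command", "go build ./...")
	mem.createMemory("test command", "go test ./...")
	fmt.Println(mem.recallMemory("command"))
}
```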

That’s more than enough to get a working agent.

Since demos are few and far between, let’s debug the caching issue. Notice how Gemini repeated that it had “already removed files” and “already committed” changes.

That’s because I’m using prompt caching, and most likely I’m using it wrong.

Let’s run a simple conversation with tracing enabled to get more intel:

Now I could analyze this myself to find out whether cached requests are sending duplicate prompts, but where would be the fun in that?

After copying the contents of the interaction to `transcript.txt`, let’s ask smolcode to reflect on its nature:

Here’s the outcome of the analysis:

Therefore, I do not see evidence that the *same user input* is being sent and processed twice *within the same turn*. The transcript shows that the full conversation history, including previous user prompts, is sent with each new request, but the latest user prompt is unique in that request.

This is good enough for now to continue experimenting without worrying too much about caching.
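The property the model checked can be written down as a small function. Below is a hypothetical sketch that could run against each traced request instead of handing the transcript to the model. The `message` type is a simplified stand-in, not Gemini's real request format. The check asks whether the latest user prompt appears exactly once among the user messages of a single request.

```go
package main

import "fmt"

// message is a hypothetical, simplified view of one entry in a request's
// conversation history; the real Gemini request types look different.
type message struct {
	Role string // "user" or "model"
	Text string
}

// latestUserPromptIsUnique reports whether the last user message in a request
// appears exactly once among that request's user messages. A duplicate would
// hint that the same prompt was sent twice within one turn.
func latestUserPromptIsUnique(history []message) bool {
	last := -1
	for i := len(history) - 1; i >= 0; i-- {
		if history[i].Role == "user" {
			last = i
			break
		}
	}
	if last < 0 {
		return true // no user prompt at all, so nothing can be duplicated
	}
	count := 0
	for _, m := range history {
		if m.Role == "user" && m.Text == history[last].Text {
			count++
		}
	}
	return count == 1
}

func main() {
	// Full history is resent with every request; only the last prompt is new.
	req := []message{
		{"user", "remove the temp files"},
		{"model", "done"},
		{"user", "now commit"},
	}
	fmt.Println(latestUserPromptIsUnique(req))
}
```

Note that a user who deliberately repeats an earlier prompt would trip this check too, so a failure is a hint to look closer rather than proof of a caching bug.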

How to work with an unleashed model?

Cursor is doing all kinds of things behind the scenes to reduce/distill the amount of context that is sent to the model.

Smolcode embraces the model and is eager to burn through tokens.

How does the workflow change?

In an ideal world, an interaction with smolcode looks like this:

You: Study instruction.md

Gemini: Here's my plan ...

Tool: read_file

Tool: read_file

Tool: edit_file

Tool: edit_file

Tool: create_checkpoint

Gemini: I'm done

You:

What’s interesting here is that after receiving the initial prompt (here in instruction.md), it just goes off on its own until it thinks it’s done.

Meaning we get to do other things in the meantime, like managing another instance of the agent.
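The shape of that interaction is the familiar loop. The model asks for tools, the agent runs them and feeds the results back, and the loop stops once the model replies without a tool call. Here is a minimal Go sketch with a scripted fake model. The `reply`/`toolCall` types and the `maxSteps` guard are assumptions for illustration, not smolcode's actual API.

```go
package main

import "fmt"

// toolCall and reply are hypothetical stand-ins for the model API's types.
type toolCall struct {
	Name string
	Arg  string
}

type reply struct {
	Text  string
	Calls []toolCall // empty when the model considers itself done
}

// model turns the conversation so far into the next reply.
type model func(history []string) reply

// runTurn keeps calling the model, executing requested tools and appending
// their results, until the model answers without asking for a tool.
// maxSteps guards against a model that never stops.
func runTurn(m model, tools map[string]func(string) string, prompt string, maxSteps int) ([]string, error) {
	history := []string{"user: " + prompt}
	for step := 0; step < maxSteps; step++ {
		r := m(history)
		history = append(history, "model: "+r.Text)
		if len(r.Calls) == 0 {
			return history, nil
		}
		for _, c := range r.Calls {
			result := "error: unknown tool " + c.Name
			if fn, ok := tools[c.Name]; ok {
				result = fn(c.Arg)
			}
			history = append(history, "tool "+c.Name+": "+result)
		}
	}
	return history, fmt.Errorf("no final answer after %d steps", maxSteps)
}

func main() {
	// A scripted fake model: read one file, then declare itself done.
	fake := func(history []string) reply {
		if len(history) == 1 {
			return reply{Text: "Here's my plan ...", Calls: []toolCall{{"read_file", "instruction.md"}}}
		}
		return reply{Text: "I'm done"}
	}
	tools := map[string]func(string) string{
		"read_file": func(path string) string { return "contents of " + path },
	}
	history, err := runTurn(fake, tools, "Study instruction.md", 10)
	if err != nil {
		panic(err)
	}
	for _, line := range history {
		fmt.Println(line)
	}
}
```

Because nothing in the loop waits for a human, you are free to switch to another pane while it runs.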

Let’s build a feature

Now let’s ask smolcode to build a feature that we need: persistent conversation histories.

We’ll start by drafting a specification together with smolcode. Then, in a separate conversation, we’ll turn it into an executable plan and execute it.

Since the session is rather long, here are some highlights:

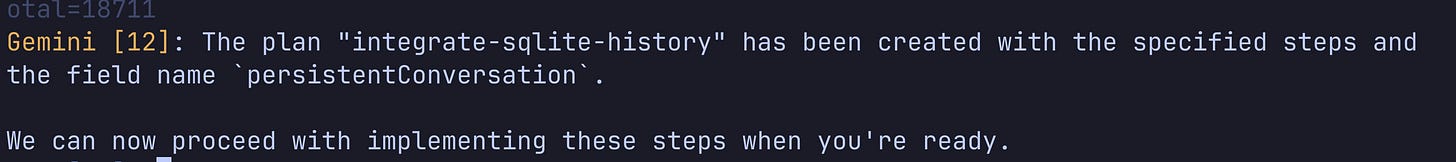

- the detailed instructions paid off, and the model actually produced a workable plan for persisting conversation histories
- while it was busy implementing the plan, I got bored and thought about the next problem: how are we actually going to use the history?
- to that end, I started a new session in another tmux pane to make a plan for integrating the history with the agent
- intermittently, we ran into rate limits and opaque internal errors that we just had to sit out with retries
- Gemini messed up while editing the `history_test.go` file, so `goimports` couldn’t format it. It got itself out of that predicament.

🥂 Cheers to uncertainty

At this point I have no clue what we just built.

Or whether it works.

I could review the code now, but since I expect it to change yet again, I’ll delay my review.

Instead, let’s throw more tokens at it and ask the agent to figure this out.

The outcome: we have the history package, but the agent isn’t using it.

The reaction: ask the agent to plan the integration.

But first it needs to fix the tests:

Now that the tests are working, we can continue with the integration.

Except that the tests aren’t working the way we think.

Running the tests actually overwrites the database file smolcode uses to store history, because the initially envisioned API had no notion of multiple database files.

This is fixed off-screen in the same manner as we’ve done so far.

Time for a review

It worked 🎉

The code we ended up with is pretty straightforward, thanks to a thoughtful first prompt.

A few comments were superficial, but we could let the agent take care of that.

Where next

If you have made it this far, here are a few ideas for where this agent can go:

- evolve `write_file` into a `generate_code` tool that uses a different model
- give the model access to Perplexity’s web search API to get up-to-date information about frameworks and libraries
- create a `tmux` tool that allows the model to manage multiple interactive programs

All of these tools are just examples of interesting questions to ask.

The answers need to be found through experimentation.