How I work with subagents

...or how I rarely use more than 50% of the available context window anymore

When I started working with subagents, I saw them mainly as a convenient way to produce more output.

It is easy to tell the model to use many subagents in parallel, watch your coding agent make N times as many tool calls at once, and feel satisfied by the sheer activity.

This is a clear benefit when you already have a plan with easily parallelizable chunks of work, such as adding new UI components in the frontend and a matching API endpoint in the backend. However, I found subagents to offer greater value on long sequential tasks.

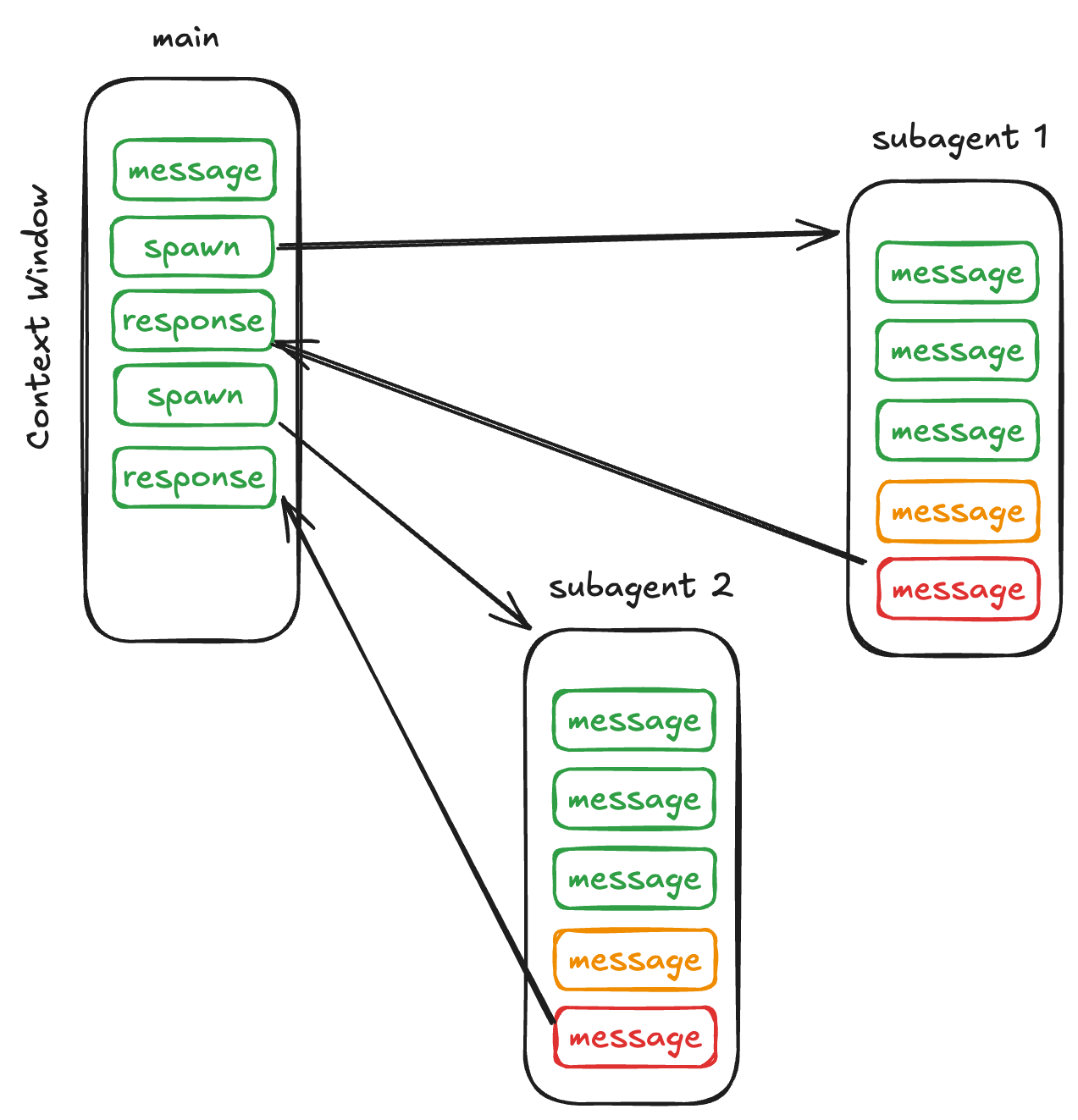

The key to this is that every subagent gets its own context window.

With a bit of care while prompting the agent in the main conversation, you essentially keep progress information in the main agent context, while small implementation decisions are encapsulated in the subagent context windows.
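To make the split between the two kinds of context concrete, here is a toy sketch in Python. It is not how any particular coding agent is implemented: `Context`, `run_subagent`, and the word-count "token" proxy are all hypothetical names and simplifications chosen for illustration. The only point it shows is that the noisy working material stays in a throwaway subagent context, while the main context receives a short summary.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Toy stand-in for a context window: just a list of messages (assumption)."""
    messages: list[str] = field(default_factory=list)

    def add(self, message: str) -> None:
        self.messages.append(message)

    def size(self) -> int:
        # Crude proxy for token usage: count whitespace-separated words.
        return sum(len(m.split()) for m in self.messages)


def run_subagent(task: str, tool_outputs: list[str]) -> tuple[str, int]:
    """Work in a fresh context and hand back only a short summary.

    tool_outputs is made-up data standing in for file reads, test logs, etc.
    """
    ctx = Context()  # fresh window, discarded when this function returns
    ctx.add(task)
    for output in tool_outputs:
        ctx.add(output)
    summary = f"done: {task}"
    return summary, ctx.size()


main = Context()
main.add("plan: step 1, step 2")

# Hypothetical noisy material a subagent would read while working.
noisy_outputs = ["test log line " * 50, "file contents " * 200]

sub_sizes = []
for step in ["step 1", "step 2"]:
    summary, used = run_subagent(step, noisy_outputs)
    main.add(summary)  # only the summary crosses back into the main context
    sub_sizes.append(used)

print("main context size:", main.size())
print("subagent context sizes:", sub_sizes)
```

In this toy model the main context grows by a few words per step, no matter how much each subagent had to read to finish its work.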

This “progress overview” in the main conversation helps keep the subagents on track and surfaces gaps in your initial prompt. I usually notice such a gap when a subagent makes a decision that is not in line with the plan given to the main agent.

Following this process, I have completed 5-10 subagent calls within a single main agent conversation, which for the most part felt like turning the crank of a manual coffee grinder.

The crank-turning prompt usually looks like this:

Study @docs/implementation.md, the plan is X

Here, docs/implementation.md describes the general development process and how the input (the plan X) is used:

Study the plan in X.

Find the next incomplete step.

You must ask the user whether this step is correct and whether you should proceed.

Then, if the plan is correct:

1. Prepare a test for the step
2. Run the test and watch it fail
3. Implement a fix
4. Run the test again
5. If it fails, update the implementation until tests pass
6. When done, report back to the user.

Use a subagent for each step, running the steps in sequence, one at a time.
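The process described in docs/implementation.md can be read as a small control loop. The Python sketch below is only an illustration of that loop, not something the coding agent actually runs: `Step`, `turn_crank`, `confirm`, `subagent`, and `make_fake_subagent` are hypothetical names, and the cap of `max_fix_attempts` is my own assumption (the instructions above simply say to iterate until the tests pass).

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    description: str
    done: bool = False


def next_incomplete(plan: list[Step]) -> Optional[Step]:
    """Return the first step that is not done, or None if the plan is finished."""
    return next((s for s in plan if not s.done), None)


def turn_crank(
    plan: list[Step],
    confirm: Callable[[Step], bool],
    subagent: Callable[[str], str],
    max_fix_attempts: int = 3,  # assumption: a safety cap, not part of the original process
) -> str:
    """One turn: pick a step, check in with the user, delegate the work, report."""
    step = next_incomplete(plan)
    if step is None:
        return "plan complete"
    if not confirm(step):
        return f"stopped: user rejected '{step.description}'"

    # Each subagent() call stands for a separate subagent with its own context.
    subagent(f"write a test for: {step.description}")
    result = subagent("run the test")  # expected to fail before the implementation exists
    attempts = 0
    while result != "pass" and attempts < max_fix_attempts:
        subagent(f"implement: {step.description}")
        result = subagent("run the test")
        attempts += 1

    if result != "pass":
        return f"gave up on '{step.description}' after {attempts} attempts"
    step.done = True
    return f"done: {step.description}"


def make_fake_subagent() -> Callable[[str], str]:
    """Hypothetical stand-in: the test fails until an 'implement' prompt was seen."""
    implemented = False

    def fake(prompt: str) -> str:
        nonlocal implemented
        if prompt.startswith("implement"):
            implemented = True
        if prompt == "run the test":
            return "pass" if implemented else "fail"
        return "ok"

    return fake


plan = [Step("add endpoint"), Step("add UI component")]
report = turn_crank(plan, confirm=lambda s: True, subagent=make_fake_subagent())
print(report)
```

Every call to `turn_crank` corresponds to one "Study …" prompt in the main conversation: the user confirms the step, the subagents do the work in sequence, and only the final report comes back.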

With this in place, the main conversation mostly looks like this:

Me: Study …

Agent: Can I proceed with step X?

Me: Yes

Agent: I am done - <long report>.

Me: Commit; Study …

…

I found that this process gives good control over results while extending the time the agent works independently to several minutes between check-ins.