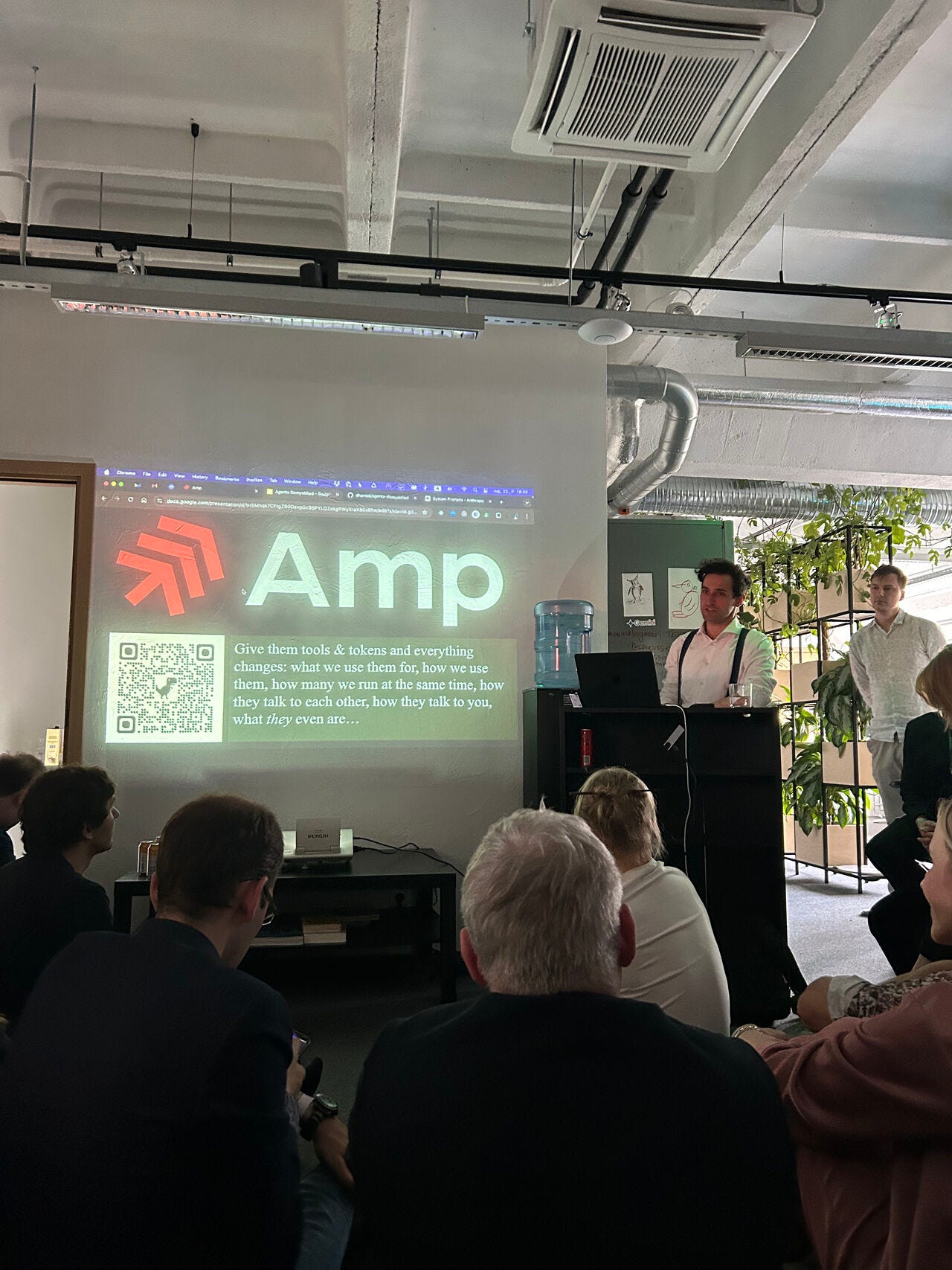

⏩ joining Sourcegraph to work on Amp

Everything is changing

Moving forward

Today is my first day after leaving Modash.io and the first of seven days before I start at Sourcegraph to work on Amp - an agentic coder that does not try to protect your wallet, in exchange for better results.

This means that staying abreast of the tectonic shifts in software development, and the volcanic eruptions that come with them, is now my bread and butter.

Things I learn, I’ll share here.

Seven Days of Building

In this 7-day period between two engagements, I have many questions to answer:

Will deterministic building blocks improve LLM performance?

How will an LLM perform if every action you want to take while developing a project is accessible through a CLI or an MCP server?

Put the precise bits into deterministic machines, leave the fuzzy bits to the LLM.

I really need to find out, and the only way to do so is to build something.

A few more days and I should have something presentable (code is already public, but it’s very much WIP).

What is key to managing multiple agents at the same time?

The agentic software development endgame seems to be managing multiple agents: a lot of input at the beginning of the process, more input at the end, and in between the agents quietly chug away, working their way through the problem.

Right now it still requires oversight, and I’m trying to figure out how to best manage that process for the time being.

Yesterday was a success: I used extensive plans, git worktrees and tmux to run two instances of the Amp CLI.

I could work through two big features in parallel, generating around 5000 lines of code in the process.

Half of that was essentially what I would have written; the other half needed more babysitting because I wasn’t clear enough in my specification.

What constitutes a good test?

My notion of test quality is changing – I’m relying more and more on end-to-end tests that exercise the application fully (which is easy for my toy projects), so that the LLM gets the necessary context to figure out what’s wrong.

The issue with e2e tests is pinpointing exactly what is wrong when they fail.

But with LLMs in your toolbox, this is less of an issue now - they excel at reading logs and analyzing root causes of failures.

So I find myself building more and more little helper programs that poke, prod and exercise a system in an LLM-friendly manner.

I call these things “inspectors” because of what they do – the notable difference from e2e tests is that you can point them at any instance of the system.

They are not built using test frameworks, because they are not tests, they are tools.

Mind you, this is in addition to regular unit and integration tests.

Observations so far

When I get a new computer, I like to set things up from scratch, so that only the things that make sense end up in my workflow.

Here’s what I’ve learned in the process:

I write lots of tiny custom utilities now, in Go, because it has become so easy. These tools cater to my preferences, like this URL opener for tmux.

I learn more – the threshold to action has dropped so much that I do more as a result. For example, I’d like better Finder integration for Markdown and JSON files – they should automatically open in a new terminal window with a dedicated program (e.g. nvim). Turns out that’s easy to do, but it was only thanks to LLMs that I bothered to find out exactly how to do it.

My notion of quality is changing more towards evaluating constructs as black boxes – I care more about how the cake tastes than about how it’s made.

That’s it for today, more content is on the way!

Congrats Dario! I’m really interested in this new project of yours, and going forward I expect to learn more from you on these topics!