Not All Gems Are Rubies

A peek into a parallel branch of software evolution

Intention

Maybe I spent too much time listening to DHH, but one point from one of his interviews stuck with me: there is beauty in programming, and unfortunately we often forget about it.

Beauty, of course, is in the eye of the beholder, but simplicity is not.

Over time I’ve come to associate these two notions with the shortest path from intention to execution.

Good tools, including programming languages, libraries, and frameworks, make this path as short as possible for the common case, while exposing the necessary knobs and levers to make veering off that path possible.

I chalk the enduring success of command languages like Bash up to this property.

Nobody wants to type require("fs").unlinkSync("temp.md") when they can type rm temp.md instead.

Under the hood, the rm command does much more than call unlink(2): it can remove both files and directories, and it protects against accidental destruction with interactive prompts.

All of these features surface on the intention layer of the command: adding -r makes rm descend into directories and remove their contents recursively, and -f turns off all confirmation prompts.

"I want to remove all files here, don't ask, I'm sure"

becomes simply

"rm -rf *"

Every character in this command is imbued with meaning; nothing can be left off without losing part of the intention.

This is beautiful.

It pushes all of the complexity of achieving a goal into a black box you don't need to worry about, unless building that black box is your job.

This is what makes abstraction so powerful: it pulls us away from unnecessary detail.

Finding the right abstractions is hard. You know you have found the wrong one when it actively gets in your way and makes achieving your goal harder.

Ruby on Rails made waves when its latest release shipped with an authentication generator. On stage, DHH half-jokingly proclaimed that he had artisanally crafted every line of code in it, and argued that core functionality needn't be outsourced to third parties when the implementation is easy and you can own it.

And easy it is: rails generate authentication is, once again, the shortest path from intention to execution, creating 15(!) files in your project, in the right places and with the right content.

Notably, the generator stops just shy of a full system: your application still needs to create users, and you’ll have to build the signup page.

These “gaps” are key: there are many valid solutions to both of these problems, and any single one provided out of the box is bound to be right only some of the time.

"Should you store cryptographically secure password hashes in your database?" on the other hand has only one objectively right answer, so it is baked into the generated code.

Generating the code and writing it directly into your project gives you a naked abstraction without a protective layer: you can just go ahead and modify the code to your needs. You have the power to do so, with the accompanying responsibility.

It gives you a clear path to ownership.

AI

Agentic coding tools are great when employed for the right purpose.

DHH advocates asking ChatGPT to learn more about a subject, but typing out all of the code yourself so that you actually learn it.

He used this technique to finally learn Bash properly while creating Omarchy, his Linux distribution for developers.

At first this sounded silly to me.

Kind of like when tailwindcss was new and everyone who had learned CSS was scratching their head, wondering how this could possibly be a good idea.

Well, I have since tried the suggestion in an area outside my expertise, and oh boy, did it help me learn.

It's the best tutor I didn't know I needed.

Learning and execution are different modes of operation, requiring different approaches.

Having Amp conjure up an executable toy example of a problem I’m trying to understand works wonders when I’m still at the stage of not knowing what I want.

It encourages progressively going deeper, until the picture is finally clear in my head.

Clarity about inner workings is essential to developing your own sense of intention.

Clear intention is what enables successful use of agentic coding tools: without knowing what you want, you cannot transfer your intention to the eager code-writing machine.

Exchanges with LLMs aren't one-sided: gaps in your intention and understanding surface when you feel unsatisfied with the output of state-of-the-art models.

More often than not, the culprit isn't a shortcoming of the model, but a few critical bits of information missing from what you gave it.

TCL

TCL is short for Tool Command Language.

An old version of it is pre-installed on your Mac.

Development started in the late 80s, when the Internet was essentially limited to academia.

A language like TCL is what happens when you make ease of abstraction and meta-programming guiding principles, while still maintaining a simple syntax.

TCL syntax is limited to the bare essentials necessary for expressing intent, and evaluation is delayed until the very last moment.
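To illustrate what “evaluation is delayed until the very last moment” means, here is a toy command evaluator in C. It is a minimal sketch under heavy simplifying assumptions, not how Tcl is actually implemented: a script is split into commands at newlines or semicolons and into words at whitespace, the first word selects a command, and a {braced} word is passed along verbatim, so a command such as the hypothetical repeat decides for itself whether and when its body runs. Real Tcl adds $variable and [command] substitution, quoting, and much more; eval_script, repeat and the output buffer are illustrative names, not part of Tcl's C API.

```c
#include <stdlib.h>
#include <string.h>

#define MAX_WORDS 16
#define MAX_WORD 256

/* Output buffer so callers can inspect what the toy `puts` produced. */
static char output[1024];

static int eval_script(const char *script);

/* puts word: append the word and a newline to the output buffer. */
static int cmd_puts(int argc, char *argv[]) {
    if (argc != 2) return -1;
    strncat(output, argv[1], sizeof output - strlen(output) - 1);
    strncat(output, "\n", sizeof output - strlen(output) - 1);
    return 0;
}

/* repeat count body: body arrives as an unevaluated string and only runs here. */
static int cmd_repeat(int argc, char *argv[]) {
    if (argc != 3) return -1;
    int count = atoi(argv[1]);
    for (int i = 0; i < count; i++) {
        if (eval_script(argv[2]) != 0) return -1;
    }
    return 0;
}

static const struct {
    const char *name;
    int (*proc)(int argc, char *argv[]);
} commands[] = {
    {"puts", cmd_puts},
    {"repeat", cmd_repeat},
};

/* The first word names the command; the remaining words are passed on as plain strings. */
static int dispatch(int argc, char *argv[]) {
    if (argc == 0) return 0; /* empty command, e.g. a blank line */
    for (size_t i = 0; i < sizeof commands / sizeof commands[0]; i++) {
        if (strcmp(argv[0], commands[i].name) == 0) return commands[i].proc(argc, argv);
    }
    return -1; /* unknown command */
}

/* Split a script into commands (newline or ';') and words (whitespace).
   A {braced} word is copied verbatim; nothing inside it is evaluated yet. */
static int eval_script(const char *script) {
    char words[MAX_WORDS][MAX_WORD];
    char *argv[MAX_WORDS];
    int argc = 0;
    const char *p = script;

    for (;;) {
        while (*p == ' ' || *p == '\t') p++;
        if (*p == '\0' || *p == '\n' || *p == ';') {
            if (dispatch(argc, argv) != 0) return -1;
            argc = 0;
            if (*p == '\0') return 0;
            p++;
            continue;
        }
        if (argc == MAX_WORDS) return -1;

        size_t len = 0;
        if (*p == '{') {
            int depth = 1;
            for (p++; *p != '\0'; p++) {
                if (*p == '{') depth++;
                else if (*p == '}' && --depth == 0) break;
                if (len + 1 >= MAX_WORD) return -1;
                words[argc][len++] = *p;
            }
            if (*p != '}') return -1; /* unbalanced braces */
            p++;
        } else {
            while (*p != '\0' && *p != ' ' && *p != '\t' && *p != '\n' && *p != ';') {
                if (len + 1 >= MAX_WORD) return -1;
                words[argc][len++] = *p++;
            }
        }
        words[argc][len] = '\0';
        argv[argc] = words[argc];
        argc++;
    }
}
```

Calling eval_script("repeat 2 {puts hi}") appends hi to the output buffer twice: until repeat runs, its body is nothing but a string.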

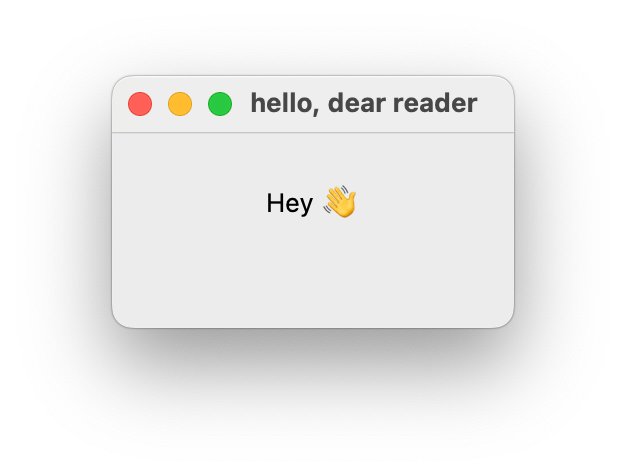

If you run this in the wish interpreter, you’ll get a native UI window in four lines of text:

```tcl
wm title . "hello, dear reader"
label .message -text "Hey 👋" -padx 24 -pady 24
pack .message
focus .
```

This sits very high on the tower of abstraction, and that is what made the language so attractive to me.

For the last four weeks, I’ve been idly playing with it, and it has lured me in deeper and deeper with its ability to strip code down to its bare essentials.

In my quest for knowledge, I learned that there is a whole parallel universe running mostly on native code, with a sprinkle of Tcl on top:

sqlite3 started as a Tcl extension,

there’s a distributed version-control system called Fossil, an alternative to Git, which ships half of GitHub’s features in a 4 MB native binary,

Tk, which makes GUIs like the one above possible, is native glue between Tcl and the operating system’s widgets,

almost all community-provided code ships with Tcl in the form of tcllib (imagine installing every npm package alongside node),

and the main source of information is the wiki, where the documentation is interspersed with comments from users.

After the initial shock of "this is weird", I noticed that this ecosystem encourages an engineering culture which:

values backward compatibility,

fearlessly relies on the system package manager for dependency management,

is mindful of performance,

and encourages narrow-but-deep modules.

The latter is no coincidence: John Ousterhout, the creator of TCL, also wrote “A Philosophy of Software Design” and co-designed the Raft distributed consensus protocol.

With AI making code generation cheap, especially the formulaic kind needed to create programming language bindings, this culture and its associated software stack are suddenly viable again.

This raises a couple of questions:

is generating bindings for libraries a skill that you can get better at, or can this be done fully automatically without much oversight?

which technologies become viable now that weren’t before? Maybe Ruby is fast enough for more things when you can easily port some modules to native code.

how important are external dependencies when you can get the precise subset of the functionality you need in code you control?
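For a sense of what that formulaic binding code looks like, here is a minimal sketch that wraps a native C function for a string-based host language. It assumes the argc/argv calling convention of classic Tcl command procedures (modern Tcl extensions instead register functions of type Tcl_ObjCmdProc via Tcl_CreateObjCommand and work with Tcl_Obj values); the wrapper signature, the clamp function and the result buffer are hypothetical, chosen so the example compiles without Tcl's headers. Every wrapper repeats the same steps: check the argument count, convert each string to a native type, call the function, and format the result.

```c
#include <errno.h>
#include <stdio.h>
#include <stdlib.h>

/* A native library function to expose (hypothetical example). */
static long clamp(long value, long lo, long hi) {
    return value < lo ? lo : value > hi ? hi : value;
}

/* Convert one string argument to a long, rejecting empty input, trailing garbage and overflow. */
static int parse_long(const char *s, long *out) {
    char *end;
    errno = 0;
    long v = strtol(s, &end, 10);
    if (errno != 0 || end == s || *end != '\0') return -1;
    *out = v;
    return 0;
}

/* Hypothetical binding in the string-in/string-out style of classic Tcl commands:
   argv[0] is the command name; the result or error message goes into `result`. */
static int clamp_cmd(int argc, const char *argv[], char *result, size_t len) {
    long value, lo, hi;
    if (argc != 4) {
        snprintf(result, len, "wrong # args: should be \"%s value lo hi\"", argv[0]);
        return -1;
    }
    if (parse_long(argv[1], &value) != 0 || parse_long(argv[2], &lo) != 0 ||
        parse_long(argv[3], &hi) != 0) {
        snprintf(result, len, "expected integer arguments");
        return -1;
    }
    snprintf(result, len, "%ld", clamp(value, lo, hi));
    return 0;
}
```

Multiplied across every function of a C library, this is exactly the kind of repetitive code that is cheap to generate and tedious to review, which is what the first question above is about.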

I intend to find out by writing a small command-line utility in C with a sprinkling of TCL on top.

Just to see: what will this feel like?

Maybe it’s good, and we’ve just forgotten.