How I use aider

No, you don't need to write your own agent

You can stop reading now and check out aider.

No really, go check their website or, even better, play around with it yourself.

What is aider?

At its core, aider runs in your terminal, reads prompts from you, and changes files in return.

The screenshot at the beginning of the article shows the entire 78 lines of Python code necessary for this.

Well, that’s how it started.

Over the last ~2 years, it got a whole range of features:

linting,

testing,

voice input,

token analysis

and much more.

How is it different from Cursor?

What’s notably absent compared to Cursor:

no tool calls,

no MCP support,

no behind-the-scenes “we manage the context for you” magic

no tool use prompts

You see, Aider does something special: it essentially encodes the higher-level feedback loop that you go through when developing software:

write code,

write tests (or write tests first if that’s your cup of tea),

run linters and formatters,

run tests,

repeat until tests pass

We only need the LLM for steps 1 and 2.
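To make the loop above concrete, here is a minimal sketch of it in Python. It is not aider's actual code: `feedback_loop` and its `edit`, `lint` and `test` callables are hypothetical names, and the assumption is that each check returns an empty string when everything is fine.

```python
from typing import Callable


def feedback_loop(
    edit: Callable[[str], None],   # hypothetical: sends a prompt to the LLM and applies its edits
    lint: Callable[[], str],       # hypothetical: returns linter errors, "" if clean
    test: Callable[[], str],       # hypothetical: returns test failures, "" if green
    request: str,
    max_rounds: int = 5,
) -> bool:
    """Repeat edit -> lint -> test until both checks come back clean."""
    prompt = request
    for _ in range(max_rounds):
        edit(prompt)                # steps 1 and 2: the only place the LLM is involved
        errors = lint() or test()   # steps 3 and 4: plain local commands, tests only run if lint is clean
        if not errors:
            return True
        # Step 5: feed the failures back and go around again.
        prompt = f"Fix these errors:\n{errors}"
    return False
```

The point of the sketch is the split: only `edit` costs an LLM request, everything else runs locally.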

Cursor is your virtual pair programming buddy.

Aider is a sharp knife in comparison.

Why does it matter?

A while ago, I wondered whether I needed to encode this high-level feedback loop myself by writing an agent.

Now I know that I don’t – Aider is the program I would have written.

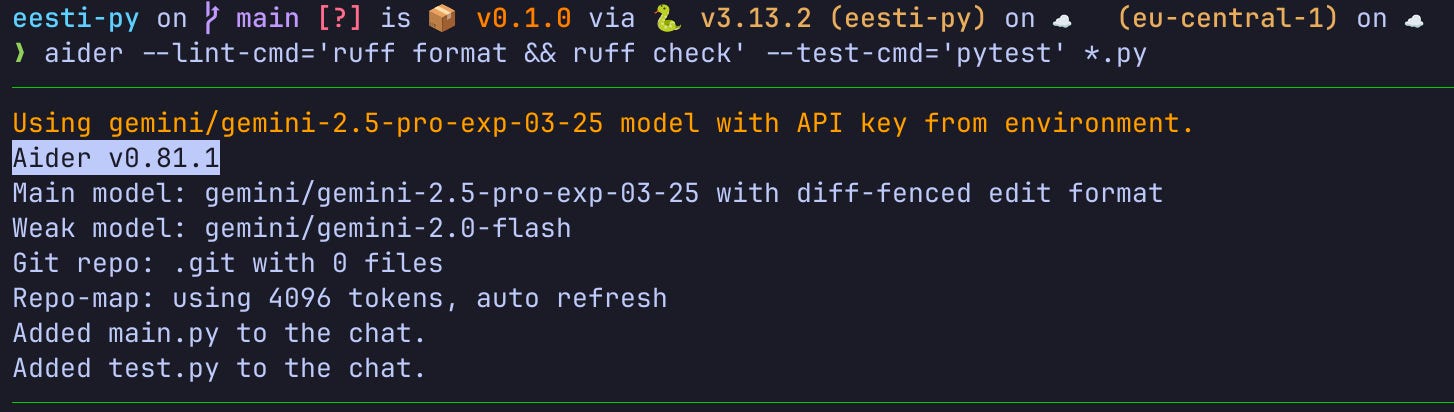

This command invokes aider in a Python repository with the linter and tester preconfigured.

As a result, the LLM does not have to figure out how to run the tests.

When you use Cursor, the LLM first has to come up with the idea that now might be a good time to run the tests. Aider does this automatically, which makes the process much faster and cheaper because you save on LLM requests.
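For readers who cannot see the screenshot, here is a rough sketch of what such an invocation can look like, written as a small Python helper that assembles the command line. `--lint-cmd` and `--test-cmd` are aider options; the helper name `aider_command` and the choice of `ruff check` and `pytest` are assumptions for illustration and may differ from the command in the image.

```python
import shlex


def aider_command(lint_cmd: str, test_cmd: str) -> list[str]:
    """Build an aider invocation with the linter and test runner preconfigured."""
    return ["aider", "--lint-cmd", lint_cmd, "--test-cmd", test_cmd]


# Assumed tools: ruff as the linter, pytest as the test runner.
print(shlex.join(aider_command("ruff check", "pytest")))
# prints: aider --lint-cmd 'ruff check' --test-cmd pytest
```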

Just look at this – this prompt:

> Write a comprehensive test suite in test.py for a function that declines an Estonian noun when provided with the four base forms of a noun as an array: nominative singular, genitive singular, partitive singular, partitive plural.

Yielded lines and lines of code:

> Okay, I will write a comprehensive test suite for the Estonian noun declension function in test.py. This suite will use pytest and include tests for various noun types and all 14 grammatical cases in singular and plural.
>
> Since the function decline_noun doesn't exist yet in main.py, these tests are expected to fail until the function is implemented.
>
> Here is the SEARCH/REPLACE block to populate test.py:

Note the last bit: “Here is the SEARCH/REPLACE block”.

Aider asks the LLM to provide diffs and then parses the response to figure out what replacements to make. It’s super primitive compared to tool calling, but it also means you don’t need to wait around for tool calls with the associated back-and-forth communication between your client and the LLM provider.
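To show how little machinery this needs, here is a simplified sketch of parsing and applying such a block. It is not aider's real parser: it assumes the file path sits on the line directly above the `<<<<<<< SEARCH` marker, and `parse_blocks` and `apply_block` are hypothetical names.

```python
import re

# Assumed layout: a path line, then SEARCH text, a divider, and REPLACE text.
BLOCK = re.compile(
    r"^(?P<path>[^\n]+)\n"
    r"<<<<<<< SEARCH\n"
    r"(?P<search>.*?)"
    r"=======\n"
    r"(?P<replace>.*?)"
    r">>>>>>> REPLACE",
    re.DOTALL | re.MULTILINE,
)


def parse_blocks(response: str) -> list[tuple[str, str, str]]:
    """Extract (path, search, replace) triples from an LLM response."""
    return [
        (m["path"].strip(), m["search"], m["replace"])
        for m in BLOCK.finditer(response)
    ]


def apply_block(content: str, search: str, replace: str) -> str:
    """Apply one replacement to a file's content."""
    if search == "":
        # An empty SEARCH section is treated here as "append to a new or empty file".
        return content + replace
    if search not in content:
        raise ValueError("SEARCH text not found in file")
    # Replace only the first occurrence, so one block means one edit.
    return content.replace(search, replace, 1)
```

Everything happens on the text of a single response, so no extra round trip to the provider is needed to apply an edit.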

And now the icing on the cake:

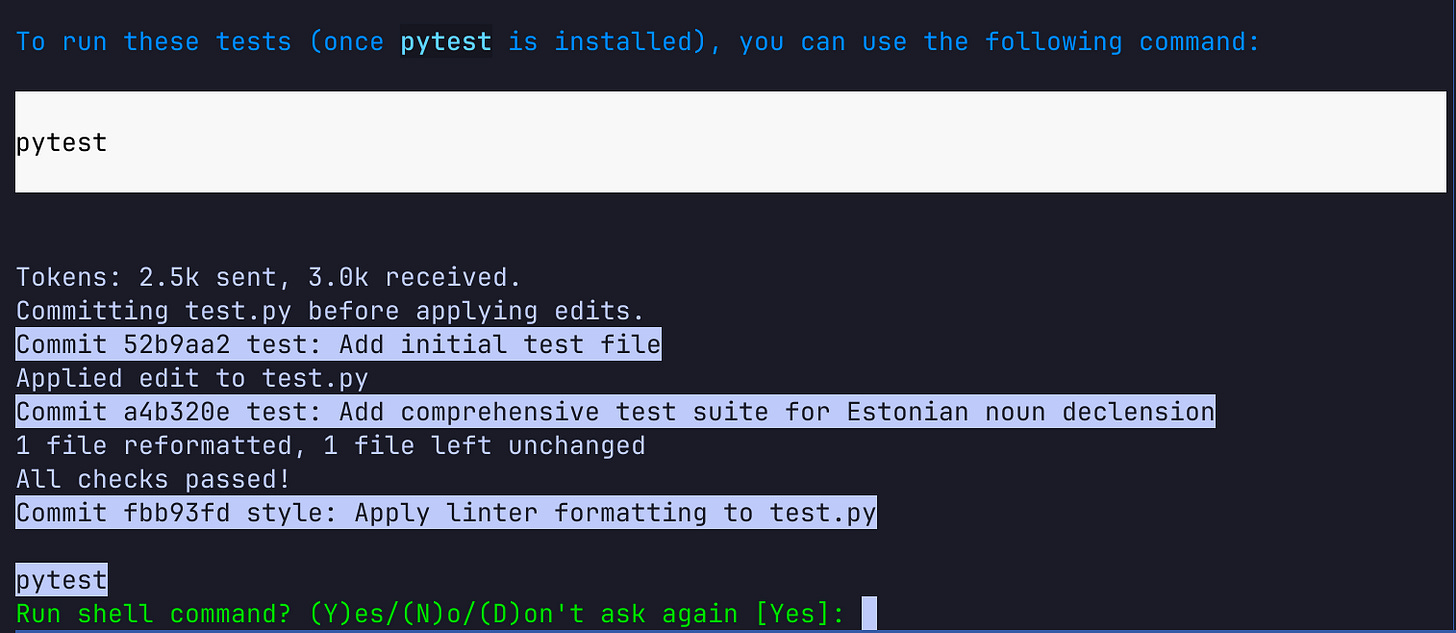

You can see that it:

automatically committed the changes,

ran the linter (“All checks passed”)

and is ready for you to run the tests

If you hit enter, it runs the tests and asks you whether you want the output in your context window:

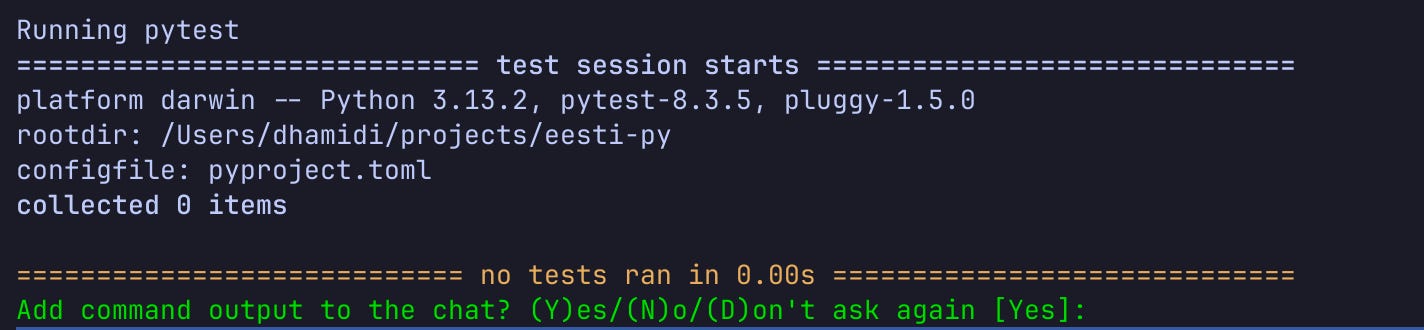

In this case, I don’t, because I messed up the pytest integration, and pytest doesn’t pick up the newly generated test file automatically.
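One common cause of this symptom is the file name, though that is an assumption here and not necessarily what went wrong in this repository. By default, pytest only collects files matching `test_*.py` or `*_test.py`, and a plain `test.py` matches neither. The helper `is_collected_by_default` below is a hypothetical name that just mirrors those two default patterns.

```python
from fnmatch import fnmatch

# pytest's default `python_files` patterns.
DEFAULT_PATTERNS = ("test_*.py", "*_test.py")


def is_collected_by_default(filename: str) -> bool:
    """Check a file name against pytest's default discovery patterns."""
    return any(fnmatch(filename, pattern) for pattern in DEFAULT_PATTERNS)


print(is_collected_by_default("test.py"))             # False
print(is_collected_by_default("test_declension.py"))  # True
```

Renaming the file, passing it to pytest explicitly, or adjusting `python_files` in the pytest configuration are the usual ways around this.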

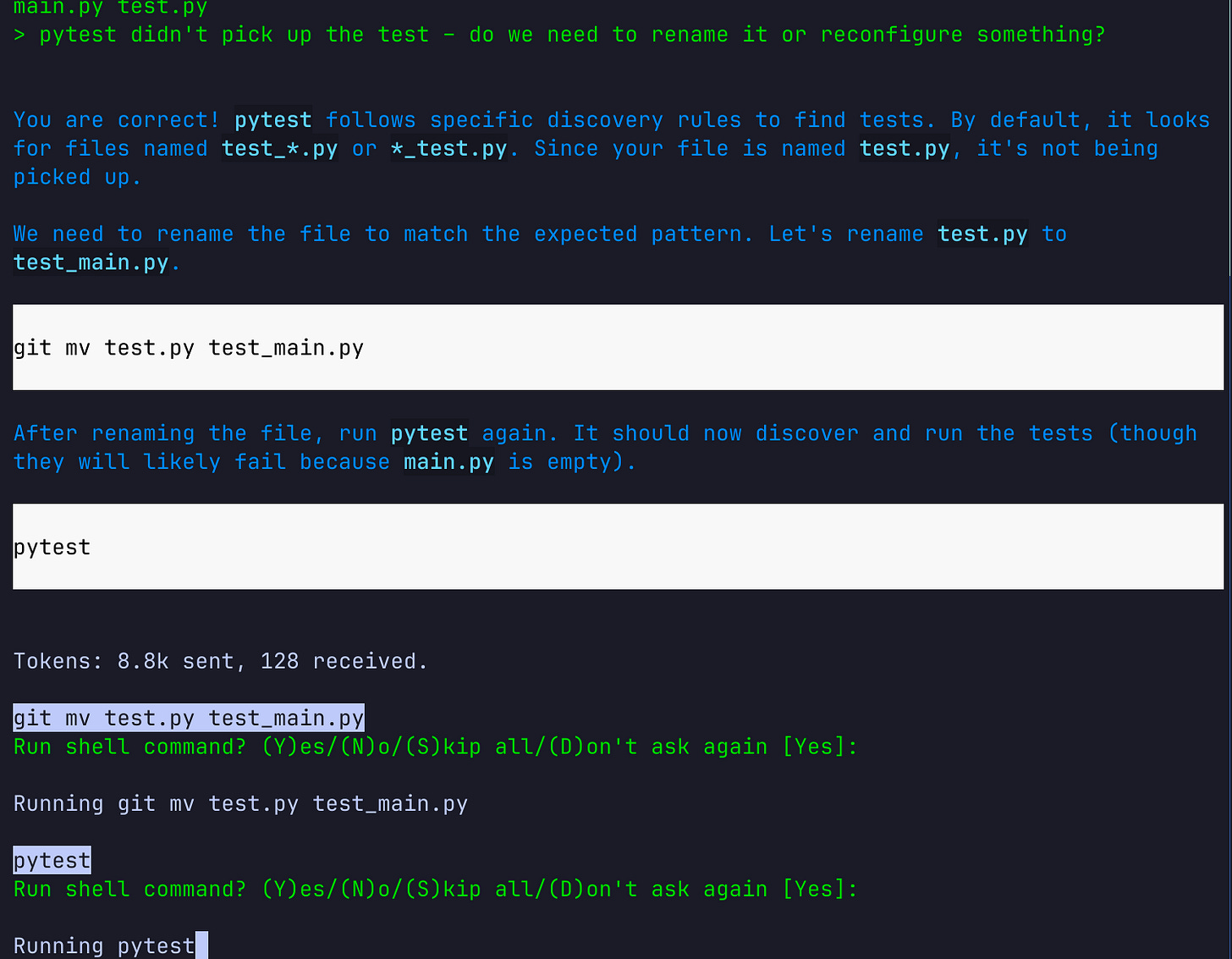

Fixing this was again easy:

Aider commits all changes automatically as checkpoints, so going back to a prior version is easy.

If you make changes outside of aider, running /commit will commit them to git with a message that follows the Conventional Commits format.

On slowness

After using Aider extensively for a side project, together with Gemini’s humongous context window, going back to Cursor feels so strange.

Cursor is great at cranking out code without oversight, i.e. it doesn’t “wait” for you to take a turn unless you tell it to.

On the one hand, that means you’re “faster” because you can twiddle your thumbs while Cursor is working hard.

With Cursor, you wait on the order of minutes before your input is required.

Aider, on the other hand, will be busy on its own for 60 seconds at most.

You stay engaged.

You keep evaluating and feeding it input.

So far, my impression is that I can go faster that way – I’m not waiting for the LLM to come up with obvious things.

My workflow right now

With LLMs, I treat planning documents as code, so the first part of working on anything is “coding up a plan”.

I add all relevant design documents from docs to the context with /add docs.

Then I ask for the feature, and specifically a plan for implementing it.

Each step of the plan needs to include:

a definition of done,

names of files/function signatures that the model would touch

Aider writes that plan directly into `docs/plan-<feature>.md`
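The plan file itself can be very simple. As an illustration only, here is a sketch of the structure described above, modelled as a small data class and rendered as a Markdown checklist. The names `PlanStep` and `render_plan` and the exact layout are assumptions; the real plan is whatever the model writes.

```python
from dataclasses import dataclass, field


@dataclass
class PlanStep:
    title: str
    definition_of_done: str
    touches: list[str] = field(default_factory=list)  # files / function signatures
    done: bool = False


def render_plan(feature: str, steps: list[PlanStep]) -> str:
    """Render the steps as a Markdown checklist, one checkbox per step."""
    lines = [f"# Plan: {feature}", ""]
    for number, step in enumerate(steps, start=1):
        box = "x" if step.done else " "
        lines.append(f"- [{box}] Step {number}: {step.title}")
        lines.append(f"  - Done when: {step.definition_of_done}")
        if step.touches:
            lines.append(f"  - Touches: {', '.join(step.touches)}")
    return "\n".join(lines) + "\n"
```

A checkbox per step gives the model an unambiguous place to mark progress, which is what the "mark it as done" part of the prompt relies on.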

Then I take that plan and add it to the context, together with the files we’ll be working on.

From that point on, my job is to turn the crank:

> Implement step 1 of the plan, and mark it as done in the plan document.

⏳ aider is busy working, I’m glancing over the code as it swooshes through the terminal.

It then runs the linter automatically, and depending on whether I need it or not, I ask it to run the tests with /test.

Then I keep turning the crank:

> Continue

The process repeats until we’re done with executing the plan.

For larger features, I check out a separate branch because Aider commits are very granular.
